Introducing Full-Stack Alignment

We're announcing an ambitious research program to co-align AI systems and the institutions that embed them with what people actually value.

A New Vision for Our Future

What will the future look like? Will it be decentralized, sustainable, or a brutalist maximization of energy? We are currently facing a profound shortage of compelling social visions for the age of AGI. Existing proposals often feel unappetizing: they either fail to inspire or lack concrete ideas for implementation. This is why, today, we're introducing Full-Stack Alignment: a vision of a society where institutions are aligned with what truly matters to us, and a collaborative research program offering concrete pathways to achieve it.

The Problem: A Social Stack That Loses Our Values

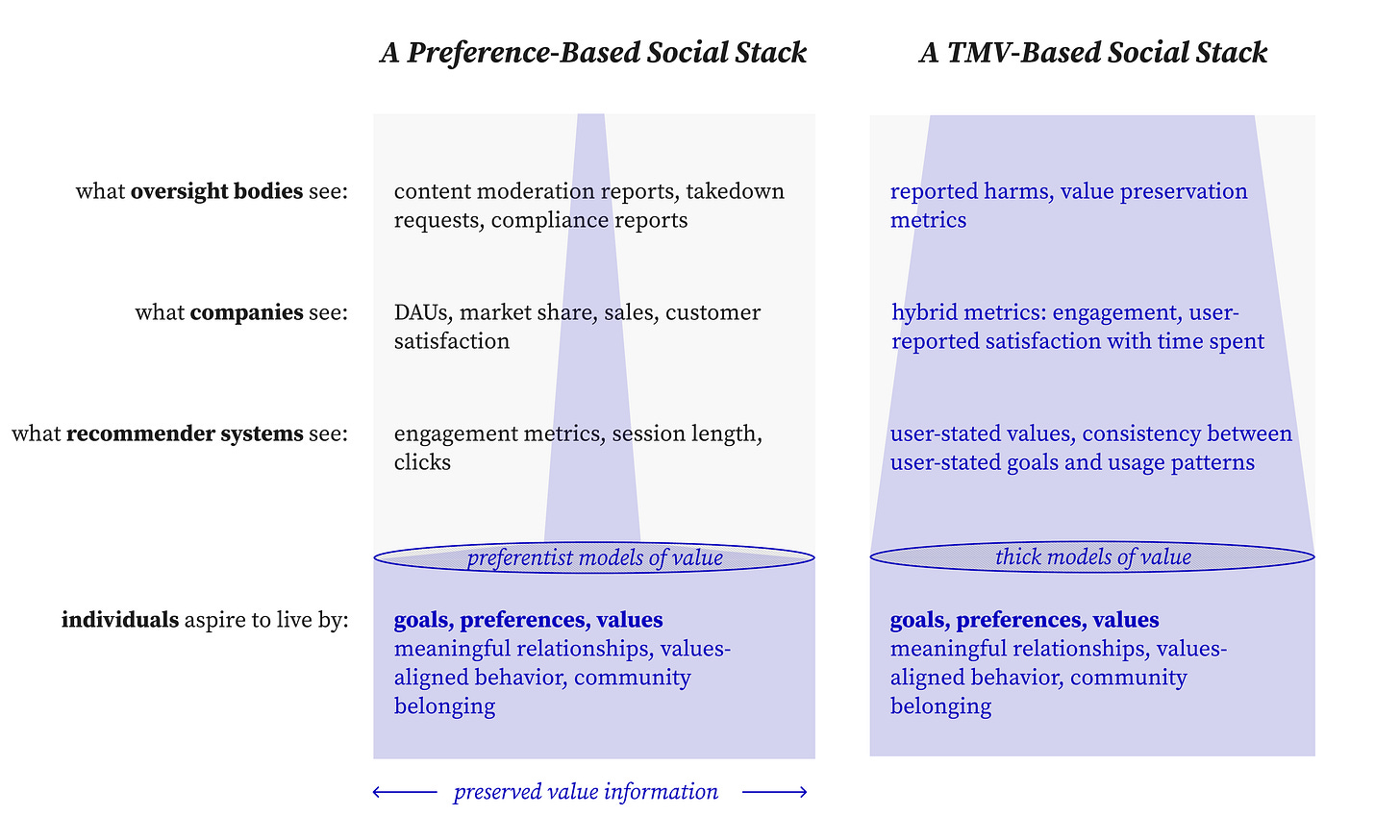

Our society runs on a "stack" of interconnected systems—from our individual lives up through the companies we work for and the institutions that govern us. Right now, this stack is broken. It loses what's most important to us.

Look at the left side of the chart. At the bottom, we as individuals have rich goals, values, and a desire for things like meaningful relationships and community belonging. But as that desire travels up the stack, it gets distorted.

To a recommender system, our search for connection is flattened into engagement metrics and clicks.

To the company that owns the system, this becomes Daily Active Users (DAUs) and market share.

To oversight bodies, all that remains are abstract takedown requests and compliance reports.

At each level, crucial information is lost. The richness of human value is compressed into a thin, optimizable metric.

And this isn't just about social media. This value-loss happens everywhere.

In education, a student’s love of learning and a parent's desire for a well-rounded child become flattened into standardized test scores and school rankings.

In healthcare, a patient’s goal for holistic well-being is reduced to billable procedures and narrow biomarker targets.

In our work, the pursuit of craftsmanship and professional pride is replaced by abstract Key Performance Indicators (KPIs).

In domain after domain, what is meaningful is replaced by what is measurable. So why does this keep happening? The problem is systemic, stemming from a few core failures:

Incentives get distorted. As we've seen, what starts as a human value gets warped by the incentives at each level of the stack, turning rich goals into simplistic metrics.

We lose sight of our collective goals. The focus on simple metrics makes it hard even to express our shared, deeper goals. In education, for instance, an intense focus on test scores means we stop having a collective conversation about what it means to raise creative, well-rounded citizens.

Our ability to course-correct is too slow. Democratic feedback and regulation operate on the timescale of years. Technology, and now AI, evolves on the timescale of weeks or days. Our steering mechanisms are no match for the speed of the systems they are meant to guide.

This is why our current systems can feel addictive, isolating, and polarizing, even when every component is "working" as intended. As AI becomes more deeply embedded in this landscape, this distortion will only accelerate.

A New Foundation: Thick Models of Value

This problem exists because our current tools for designing AI and institutions are too primitive. They either reduce our values to simple preferences (like clicks) or rely on vague text commands ("be helpful") that are open to misinterpretation and manipulation.

In our position paper, we set out a new paradigm: Thick Models of Value (TMV).

Think of two people you know who are fighting, or of two countries in conflict, such as Israel and Palestine or Russia and Ukraine. You can think of each such fight as a search for a deal that would satisfy both sides, but today that search often fails. Part of the reason is that the search space is usually very narrow: will one side pay the other some money, or give up some property? A richer picture of what each side actually cares about could widen that search.

Instead of being value-neutral, TMV takes a principled stand on the structure of human values, much like grammar provides structure for language or a type system provides structure for code. It provides a richer, more stable way to represent what we care about, allowing systems to distinguish an enduring value like "honesty" from a fleeting preference, an addiction, or a political slogan.

This brings us to the right side of the chart. In a TMV-based social stack, value information is preserved.

Our desire for connection is understood by the recommender system through user-stated values and the consistency between our goals and actions.

Companies see hybrid metrics that combine engagement with genuine user satisfaction and well-being.

Oversight bodies can see reported harms and value-preservation metrics, giving them a more faithful signal of a system's social impact.

By preserving this information, we can build systems that serve our deeper intentions.
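The post does not say how such hybrid metrics would be computed, and Thick Models of Value are far richer than any single score. Still, a toy sketch can make the contrast with a pure engagement metric concrete. The following is a minimal, hypothetical illustration, assuming three per-session signals normalized to [0, 1] and arbitrary weights; `SessionSignals`, `hybrid_score`, and the weight values are illustrative placeholders, not part of the published work.

```python
from dataclasses import dataclass


@dataclass
class SessionSignals:
    """Hypothetical per-session signals, each normalized to the range [0, 1]."""
    engagement: float    # e.g. normalized time-on-app or click-through
    satisfaction: float  # e.g. a user-reported "was this worth your time?"
    wellbeing: float     # e.g. consistency between stated goals and actual use


def hybrid_score(s: SessionSignals,
                 w_engagement: float = 0.3,
                 w_satisfaction: float = 0.4,
                 w_wellbeing: float = 0.3) -> float:
    """Weighted average of the three signals (placeholder weights, must sum to 1)."""
    weights = (w_engagement, w_satisfaction, w_wellbeing)
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    for value in (s.engagement, s.satisfaction, s.wellbeing):
        if not 0.0 <= value <= 1.0:
            raise ValueError("signals must be normalized to [0, 1]")
    return (w_engagement * s.engagement
            + w_satisfaction * s.satisfaction
            + w_wellbeing * s.wellbeing)


# A highly engaging session the user regrets vs. a moderately engaging one they valued.
regretted = SessionSignals(engagement=0.9, satisfaction=0.2, wellbeing=0.1)
worthwhile = SessionSignals(engagement=0.5, satisfaction=0.9, wellbeing=0.8)
print(hybrid_score(regretted) < hybrid_score(worthwhile))  # True
```

With these placeholder weights, the regretted session ranks below the worthwhile one, the reverse of what an engagement-only metric would report. A real value-preserving metric would need far more careful signal design than a fixed weighted average.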

The Vision: What a Full-Stack Aligned Future Looks Like

This isn't just a technical fix; it's a social vision for a world where our technology and institutions co-evolve to help us flourish. What does this future look like in practice?

AI That Serves Your Autonomy. Imagine an AI assistant that helps you pursue "health." Instead of just optimizing your biomarkers for longevity, it understands that you value the vitality and joy of physical activity and helps you integrate that into your life in a way you'd endorse upon reflection. It becomes a steward for your values, not a manipulator of your preferences.

An Economy That Prices in Meaning. Imagine an economic system where companies are rewarded for genuinely improving lives. A fitness provider wouldn't be paid based on membership fees but on their members' sustained vitality. An education platform would be rewarded for fostering curiosity and creativity, not just for test scores.

Democracy at the Speed of AI. Imagine democratic institutions that can respond to rapid change without sacrificing legitimacy. Instead of relying on slow, surface-level polls, AI-powered representatives could operate from a deep, structured understanding of their constituents' core values, allowing for nimble, real-time negotiation that is still grounded in the public will.

Join Us in Building This Future

This is more than a thought experiment. It is a collaborative research initiative to co-align AI systems and institutions with what people genuinely value. We are a group of 30+ researchers committed to this goal.

Together, we just published:

A position paper that articulates the conceptual foundations of Full-Stack Alignment and Thick Models of Value. This is the best place to start if you want to understand this new line of work: Read it here.

A website that will be the homepage for this research program going forward: full-stack-alignment.ai.

We will post our progress and requests for proposals on the website and on our Substack.

Stay tuned—we are just getting started.
